Some questions and use cases that brought me to investigate into this area:

- Can Kettle be called directly from a database trigger? So when rows get inserted, deleted, changed, I want to call a Kettle transformation.

- Can we call a function in a SQL statement that calculates the fraud probability calculated by a WEKA data mining model?

- Can we enrich some inserted data rows by external data sources?

- Also performance considerations: I dont want to transport the data to the process, I want to process the data were it is

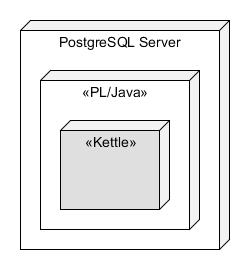

So, lets try to integrate and embed Kettle into PostgreSQL:

Here is a short how to (based on a Windows 7 64 bit machine running PostgreSQL 9.1):

- Download pljava-xxx.tar.gz from http://pgfoundry.org/projects/pljava – PL/Java is needed to call Java from PostgreSQL

- Stop the postgresql Service, make a backup

- After unpacking, follow the instructions given in pljava-x86_64-w64-mingw32-pg9.1-1.4.3/docs/docs/readme.html

Example for modifying the postgresql.conf:

- dynamic_library_path = ‚$libdir;C:/Pentaho/_solutions_samples/realtime/pljava‘

- log_min_messages= info

- custom_variable_classes = ‚pljava‘

- pljava.classpath=C:/Pentaho/_solutions_samples/realtime/pljava/pljava.jar

- [When needed, add and modify:] pljava.vmoptions = ‚-Xmx512M‘

You may need to change the windows system PATH since I found an issue when loading pljava.dll

ERROR: could not load library „[…]/pljava.dll“: The specified module could not be found.

Dependency Walker helped in finding the missing parts, so I added the following to the system PATH (this might be different on your setup and is only an example):

- C:\Program Files\Java\jre6\bin\server

- C:\Program Files\PostgreSQL\9.1\bin

And follow the other steps in the readme (depending on your needs) and finally:

CREATE FUNCTION getsysprop(VARCHAR) RETURNS VARCHAR

AS ‚java.lang.System.getProperty‘

LANGUAGE java;SELECT getsysprop(‚user.home‘);

The result of the query and function call is C:\User\postgres since the PostgreSQL service and the JVM runs as user postgres.

- Now, integrate the kettle-core.jar library for a first test:

SELECT sqlj.install_jar(‚file:///Pentaho/pdi-ee-4.3.0-GA/data-integration/lib/kettle-core.jar‘,’kettle_core‘,false);

SELECT sqlj.set_classpath(‚public‘,’kettle_core‘);

CREATE FUNCTION initCapKettle(VARCHAR) RETURNS VARCHAR AS ‚org.pentaho.di.core.util.StringUtil.initCap‘ LANGUAGE javaU;

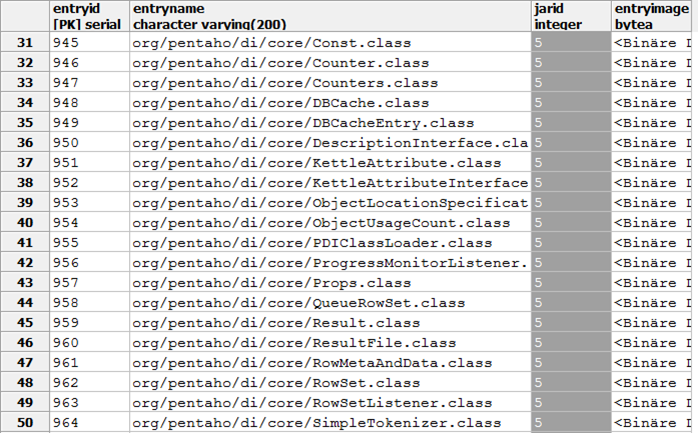

If you like, have a look at the loaded classes in table sql.jar_entry:

After executing select initCapKettle(‚jens‘); you can check if the first letter is changed into a capital ‚J‘ by calling a Kettle method:

So, the first part of the tests worked very well.

Considerations for the future part 2 Testing a Kettle transformation of the prove of concept:

- PL/Java is limited to single threading in the JVM: We need to use the Kettle Single Threading Engine

- We may need to keep instances of the transformation meta definition. When to clean up?

- We may need to define a threshold for processing a specific number of rows in a chunk or after a timeout to avoid excessive burden to the database.

- Im sure there are other unknowns

This was first presented at the Pentaho Community Meeting 2012 in Amsterdam September, 29th 2012 and part 2 with more testings will come soon.